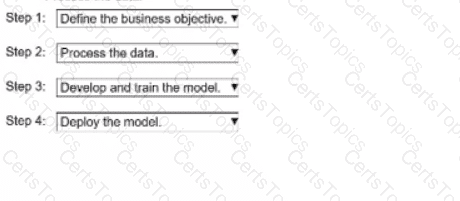

Step 1: Define the business objective.

Step 2: Process the data.

Step 3: Develop and train the model.

Step 4: Deploy the model.

This order follows the machine learning lifecycle as described in the Amazon SageMaker documentation and the AWS Certified Machine Learning Specialty Study Guide. The lifecycle describes the sequence of tasks required to build, train, and deploy a custom ML model.

From AWS documentation:

"The machine learning process begins with defining the business problem, followed by collecting and processing data, developing and training models, and finally deploying them into production for inference."

Step 1 – Define the business objective:

This step involves clearly identifying the business problem to be solved and determining the measurable outcomes expected from the ML model. This ensures alignment between business goals and ML outputs.

Step 2 – Process the data:

Data is collected, cleaned, transformed, and prepared for training. This includes handling missing values, normalizing data, and performing feature engineering. The quality of this phase directly influences model performance.
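As an illustration of two of the tasks named above, the following is a minimal sketch in plain Python that fills missing values with the column mean and then applies min-max normalization. The function names and the `raw_ages` sample are hypothetical and chosen only for this example; mean imputation and min-max scaling are just one common choice, not a method prescribed by AWS.

```python
from statistics import mean

def impute_missing(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical feature column with one missing entry.
raw_ages = [20, None, 40, 60]
clean_ages = impute_missing(raw_ages)        # [20, 40, 40, 60]
scaled_ages = min_max_normalize(clean_ages)  # [0.0, 0.5, 0.5, 1.0]
```

In practice the fill value and the min/max bounds would be computed on the training split only and then reused for validation and production data.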

Step 3 – Develop and train the model:

The model is built and trained on the processed data using algorithms appropriate to the problem (e.g., regression, classification, clustering). Hyperparameters are tuned to optimize the chosen evaluation metric (for example, accuracy for a classifier) on data held out from training.
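The training and tuning loop can be sketched without any ML library. The example below is an assumption-laden toy: it fits a one-variable linear regression by gradient descent and tries a small grid of learning rates, keeping the one with the lowest mean squared error on a held-out validation set. The data, the candidate learning rates, and all function names are made up for illustration.

```python
def train_linear(xs, ys, learning_rate, epochs=500):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def mse(w, b, xs, ys):
    """Mean squared error of the line (w, b) on the given points."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Synthetic data generated from y = 2x + 1 (illustrative only).
train_x = [0, 1, 2, 3, 4, 5]
train_y = [2 * x + 1 for x in train_x]
val_x = [6, 7]
val_y = [2 * x + 1 for x in val_x]

# Hyperparameter tuning: a tiny grid search over the learning rate,
# scored on the validation set rather than the training set.
best = None
for lr in (0.001, 0.01, 0.05):
    w, b = train_linear(train_x, train_y, lr)
    score = mse(w, b, val_x, val_y)
    if best is None or score < best[0]:
        best = (score, lr, w, b)

best_score, best_lr, best_w, best_b = best
```

Scoring candidates on held-out data is the key design choice here: a hyperparameter that only looks good on the training set may not generalize.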

Step 4 – Deploy the model:

Once validated, the model is deployed to a production environment (e.g., an Amazon SageMaker endpoint) to make predictions on new data. Continuous monitoring and periodic retraining help keep the model effective as the incoming data changes.
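The monitoring part of this step can be illustrated with a small, service-independent sketch. The `ErrorMonitor` class below is hypothetical and does not correspond to any SageMaker API: it keeps a rolling window of absolute prediction errors and signals that retraining may be needed once the window's average error exceeds a threshold. The window size, threshold, and sample values are assumptions chosen for the example.

```python
from collections import deque

class ErrorMonitor:
    """Track recent prediction errors and flag when they grow too large."""

    def __init__(self, window=3, threshold=1.0):
        self.errors = deque(maxlen=window)  # keeps only the latest `window` errors
        self.threshold = threshold

    def record(self, prediction, actual):
        """Store the absolute error for one prediction once the true value is known."""
        self.errors.append(abs(prediction - actual))

    def needs_retraining(self):
        """Return True when the window is full and its mean error exceeds the threshold."""
        if len(self.errors) < self.errors.maxlen:
            return False
        return sum(self.errors) / len(self.errors) > self.threshold

monitor = ErrorMonitor(window=3, threshold=1.0)

# Predictions that track the true values closely.
for pred, actual in [(10.0, 10.2), (11.0, 10.9), (12.0, 12.1)]:
    monitor.record(pred, actual)
healthy = monitor.needs_retraining()

# Later predictions are consistently off by 3, e.g. after the data distribution shifts.
for pred, actual in [(10.0, 13.0), (11.0, 14.0), (12.0, 15.0)]:
    monitor.record(pred, actual)
drifted = monitor.needs_retraining()
```

Here `healthy` is `False` and `drifted` is `True`; in a real deployment the retraining signal would typically trigger a return to the data processing and training steps.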

Referenced AWS AI/ML Documents and Study Guides:

Amazon SageMaker Developer Guide – Machine Learning Lifecycle

AWS Certified Machine Learning Specialty Study Guide – Model Development Lifecycle

AWS ML Best Practices Whitepaper – End-to-End ML Workflow