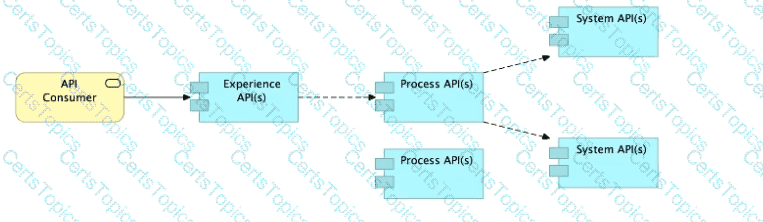

Refer to the exhibit.

What is the best way to decompose one end-to-end business process into a collaboration of Experience, Process, and System APIs?

A) Handle customizations for the end-user application at the Process API level rather than the Experience API level

B) Allow System APIs to return data that is NOT currently required by the identified Process or Experience APIs

C) Always use a tiered approach by creating exactly one API for each of the 3 layers (Experience, Process and System APIs)

D) Use a Process API to orchestrate calls to multiple System APIs, but NOT to other Process APIs

What condition requires using a CloudHub Dedicated Load Balancer?

What is true about where an API policy is defined in Anypoint Platform and how it is then applied to API instances?

A Rate Limiting policy is applied to an API implementation to protect the back-end system. Recently, there have been surges in demand that cause some API client POST requests to the API implementation to be rejected with policy-related errors, causing delays and complications to the API clients.

How should the API policies that are applied to the API implementation be changed to reduce the frequency of errors returned to API clients, while still protecting the back-end system?