26 of 55.

A data scientist at an e-commerce company is working with user data obtained from its subscriber database and has stored the data in a DataFrame df_user.

Before further processing, the data scientist wants to create another DataFrame df_user_non_pii and store only the non-PII columns.

The PII columns in df_user are name, email, and birthdate.

Which code snippet can be used to meet this requirement?

39 of 55.

A Spark developer is developing a Spark application to monitor task performance across a cluster.

One requirement is to track the maximum processing time for tasks on each worker node and consolidate this information on the driver for further analysis.

Which technique should the developer use?

17 of 55.

A data engineer has noticed that upgrading the Spark version in their applications from Spark 3.0 to Spark 3.5 has improved the runtime of some scheduled Spark applications.

Looking further, the data engineer realizes that Adaptive Query Execution (AQE) is now enabled.

Which operation does AQE perform to automatically improve the Spark application performance?

21 of 55.

What is the behavior of the function date_sub(start, days) if a negative value is passed into the days parameter?
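As a rough illustration of the arithmetic involved: `date_sub(start, days)` returns the date that is `days` before `start`, so a negative `days` value moves the result forward in time. The sketch below models this with the Python standard library only; `date_sub_model` is a hypothetical helper, not the Spark function itself.

```python
from datetime import date, timedelta


def date_sub_model(start: date, days: int) -> date:
    """Plain-Python model of date_sub: the date `days` before `start`."""
    return start - timedelta(days=days)


earlier = date_sub_model(date(2024, 1, 10), 3)    # positive: moves back
later = date_sub_model(date(2024, 1, 10), -3)     # negative: moves forward

print(earlier)  # 2024-01-07
print(later)    # 2024-01-13
```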

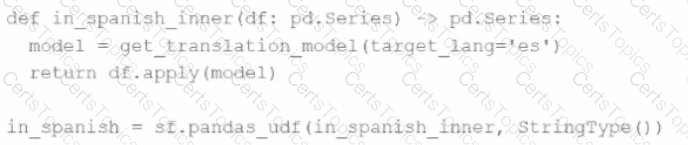

An MLOps engineer is building a Pandas UDF that applies a language model to translate English strings into Spanish. The initial code loads the model on every call to the UDF, which hurts the performance of the data pipeline.

The initial code is:

def in_spanish_inner(df: pd.Series) -> pd.Series:
    model = get_translation_model(target_lang='es')
    return df.apply(model)

in_spanish = sf.pandas_udf(in_spanish_inner, StringType())

How can the MLOps engineer change this code to reduce how many times the language model is loaded?
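One pattern that fits this problem is to have the function receive an iterator of batches and load the model once before looping over them. In PySpark this shape corresponds to a Pandas UDF typed as `Iterator[pd.Series] -> Iterator[pd.Series]`. The sketch below models the idea in plain Python, with lists standing in for `pd.Series` batches; the `get_translation_model` stub and its tiny lookup table are assumptions made so the example runs on its own.

```python
from typing import Callable, Iterator, List

LOAD_COUNT = 0  # counts how often the stub model is loaded


def get_translation_model(target_lang: str) -> Callable[[str], str]:
    """Hypothetical stand-in for the expensive model loader in the question."""
    global LOAD_COUNT
    LOAD_COUNT += 1
    lookup = {"hello": "hola", "world": "mundo"}
    return lambda text: lookup.get(text, text)


def in_spanish_inner(batches: Iterator[List[str]]) -> Iterator[List[str]]:
    # Load the model once, then reuse it for every batch in the iterator.
    model = get_translation_model(target_lang="es")
    for batch in batches:
        yield [model(text) for text in batch]


batches = iter([["hello", "world"], ["hello"], ["spark"]])
translated = list(in_spanish_inner(batches))

print(translated)
print("model loads:", LOAD_COUNT)
```

With three batches the stub is loaded once, whereas the per-call version in the question would load it once per batch.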

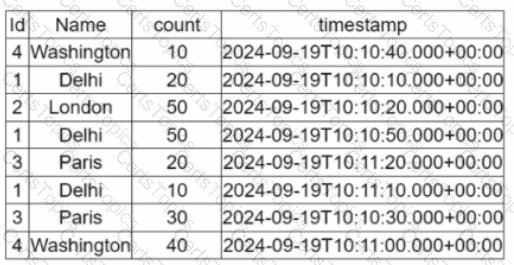

A data engineer is working on the DataFrame:

(Referring to the table image: it has columns Id, Name, count, and timestamp.)

Which code fragment should the engineer use to extract the unique values in the Name column into an alphabetically ordered list?

49 of 55.

In the code block below, aggDF contains aggregations on a streaming DataFrame:

aggDF.writeStream \
    .format("console") \
    .outputMode("???") \
    .start()

Which output mode at line 3 ensures that the entire result table is written to the console during each trigger execution?

41 of 55.

A data engineer is working on the DataFrame df1 and wants the Name with the highest count to appear first (descending order by count), followed by the next highest, and so on.

The DataFrame has columns:

id | Name | count | timestamp
---|------|-------|----------
1 | USA | 10 |
2 | India | 20 |
3 | England | 50 |
4 | India | 50 |
5 | France | 20 |
6 | India | 10 |
7 | USA | 30 |
8 | USA | 40 |

Which code fragment should the engineer use to sort the data in the Name and count columns?
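To make the expected ordering concrete, here is a plain-Python sketch under one reading of the requirement: count descending, with Name ascending as an assumed tie-breaker. In PySpark the equivalent ordering could be written as `df1.orderBy(col("count").desc(), col("Name").asc())`. The rows are copied from the table above with the timestamp column omitted.

```python
# (id, Name, count) rows from the question's table
rows = [
    (1, "USA", 10),
    (2, "India", 20),
    (3, "England", 50),
    (4, "India", 50),
    (5, "France", 20),
    (6, "India", 10),
    (7, "USA", 30),
    (8, "USA", 40),
]

# Negating count gives descending order; Name breaks ties in ascending order.
ordered = sorted(rows, key=lambda r: (-r[2], r[1]))

for row in ordered:
    print(row)
```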

35 of 55.

A data engineer is building a Structured Streaming pipeline and wants it to recover from failures or intentional shutdowns by continuing where it left off.

How can this be achieved?

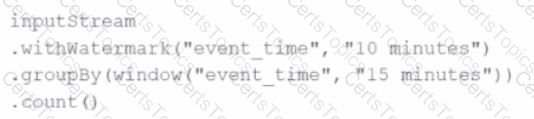

Given this code:

.withWatermark("event_time", "10 minutes")
.groupBy(window("event_time", "15 minutes"))
.count()

What happens to data that arrives after the watermark threshold?
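The sketch below is a simplified plain-Python model of how a 10-minute watermark interacts with 15-minute tumbling windows. It assumes the watermark trails the latest event time seen so far and is updated after every record; Spark actually advances it between micro-batches and only states that data later than the threshold may be dropped, so treat this as an approximation. The helper names and sample timestamps are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta

DELAY = timedelta(minutes=10)    # watermark delay from the snippet
WINDOW = timedelta(minutes=15)   # tumbling window size from the snippet
EPOCH = datetime(1970, 1, 1)


def window_start(event_time):
    """Start of the 15-minute tumbling window containing event_time."""
    return event_time - ((event_time - EPOCH) % WINDOW)


def count_with_watermark(event_times):
    counts, dropped, max_seen = Counter(), [], None
    for event_time in event_times:
        start = window_start(event_time)
        # A record is too late once its window ended at or before the watermark.
        if max_seen is not None and start + WINDOW <= max_seen - DELAY:
            dropped.append(event_time)
            continue
        counts[start] += 1
        max_seen = event_time if max_seen is None else max(max_seen, event_time)
    return counts, dropped


day = datetime(2024, 1, 1)
arrivals = [day.replace(hour=12, minute=m) for m in (1, 31, 20, 5)]
counts, dropped = count_with_watermark(arrivals)

print(dict(counts))
print(dropped)
```

In this run the 12:20 record arrives out of order but is still counted because its window is open, while the 12:05 record is ignored because its window closed before the watermark of 12:21.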


10 of 55.

What is the benefit of using Pandas API on Spark for data transformations?

A data engineer is streaming data from Kafka and requires:

Minimal latency

Exactly-once processing guarantees

Which trigger mode should be used?

25 of 55.

A data analyst is working on employees_df and needs to add a new column where a 10% tax is calculated on the salary.

Additionally, the DataFrame contains the column age, which is not needed.

Which code fragment adds the tax column and removes the age column?

Which command overwrites an existing JSON file when writing a DataFrame?

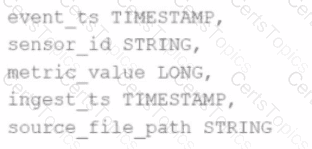

Given the schema:

event_ts TIMESTAMP,
sensor_id STRING,
metric_value LONG,
ingest_ts TIMESTAMP,
source_file_path STRING

The goal is to deduplicate based on: event_ts, sensor_id, and metric_value.
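Deduplicating on a subset of columns means two rows count as duplicates when those columns match, even if the remaining columns differ. In PySpark this is what `dropDuplicates` with a column list does. The plain-Python sketch below models the idea; it keeps the first row seen per key, whereas Spark makes no promise about which duplicate survives. The sample rows and the `drop_duplicates` helper are hypothetical.

```python
def drop_duplicates(rows, subset):
    """Keep one row per distinct combination of the `subset` columns."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row[column] for column in subset)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


# Hypothetical sample rows using the schema from the question
rows = [
    {"event_ts": "2024-01-01 00:00:00", "sensor_id": "s1", "metric_value": 7,
     "ingest_ts": "2024-01-01 00:01:00", "source_file_path": "/a.json"},
    {"event_ts": "2024-01-01 00:00:00", "sensor_id": "s1", "metric_value": 7,
     "ingest_ts": "2024-01-01 00:05:00", "source_file_path": "/b.json"},
    {"event_ts": "2024-01-01 00:00:00", "sensor_id": "s2", "metric_value": 7,
     "ingest_ts": "2024-01-01 00:05:00", "source_file_path": "/b.json"},
]

deduped = drop_duplicates(rows, ["event_ts", "sensor_id", "metric_value"])
print(len(deduped))
```

The first two rows differ only in `ingest_ts` and `source_file_path`, so they collapse into one.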


A DataFrame df has columns name, age, and salary. The developer needs to sort the DataFrame by age in ascending order and salary in descending order.

Which code snippet meets the requirement of the developer?

48 of 55.

A data engineer needs to join multiple DataFrames and has written the following code:

from pyspark.sql.functions import broadcast

data1 = [(1, "A"), (2, "B")]
data2 = [(1, "X"), (2, "Y")]
data3 = [(1, "M"), (2, "N")]

df1 = spark.createDataFrame(data1, ["id", "val1"])
df2 = spark.createDataFrame(data2, ["id", "val2"])
df3 = spark.createDataFrame(data3, ["id", "val3"])

df_joined = df1.join(broadcast(df2), "id", "inner") \
    .join(broadcast(df3), "id", "inner")

What will be the output of this code?

32 of 55.

A developer is creating a Spark application that performs multiple DataFrame transformations and actions. The developer wants to maintain optimal performance by properly managing the SparkSession.

How should the developer handle the SparkSession throughout the application?

38 of 55.

A data engineer is working with Spark SQL and has a large JSON file stored at /data/input.json.

The file contains records with varying schemas, and the engineer wants to create an external table in Spark SQL that:

Reads directly from /data/input.json.

Infers the schema automatically.

Merges differing schemas.

Which code snippet should the engineer use?

15 of 55.

A data engineer is working on a Streaming DataFrame (streaming_df) with the following streaming data:

id | name | count | timestamp
---|------|-------|----------
1 | Delhi | 20 | 2024-09-19T10:11
1 | Delhi | 50 | 2024-09-19T10:12
2 | London | 50 | 2024-09-19T10:15
3 | Paris | 30 | 2024-09-19T10:18
3 | Paris | 20 | 2024-09-19T10:20
4 | Washington | 10 | 2024-09-19T10:22

Which operation is supported with streaming_df?

6 of 55.

Which components of Apache Spark's architecture are responsible for carrying out tasks when assigned to them?

24 of 55.

Which code should be used to display the schema of the Parquet file stored in the location events.parquet?

30 of 55.

A data engineer is working on a num_df DataFrame and has a Python UDF defined as:

def cube_func(val):
    return val * val * val

Which code fragment registers and uses this UDF as a Spark SQL function to work with the DataFrame num_df?

A developer notices that all the post-shuffle partitions in a dataset are smaller than the value set for spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold.

Which type of join will Adaptive Query Execution (AQE) choose in this case?

What is the risk of converting a large Pandas API on Spark DataFrame back to a Pandas DataFrame?

A data engineer has been asked to produce a Parquet table which is overwritten every day with the latest data. The downstream consumer of this Parquet table has a hard requirement that the data in this table is produced with all records sorted by the market_time field.

Which line of Spark code will produce a Parquet table that meets these requirements?

A data scientist is working with a Spark DataFrame called customerDF that contains customer information. The DataFrame has a column named email with customer email addresses. The data scientist needs to split this column into username and domain parts.

Which code snippet splits the email column into username and domain columns?

27 of 55.

A data engineer needs to add all the rows from one table to all the rows from another, but not all the columns in the first table exist in the second table.

The error message is:

AnalysisException: UNION can only be performed on tables with the same number of columns.

The existing code is:

au_df.union(nz_df)

The DataFrame au_df has one extra column that does not exist in the DataFrame nz_df, but otherwise both DataFrames have the same column names and data types.

What should the data engineer fix in the code to ensure the combined DataFrame can be produced as expected?
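For context, `union` matches columns by position and requires the same column count, while PySpark's `unionByName` with `allowMissingColumns=True` matches by name and fills absent columns with nulls. The plain-Python sketch below models that second behavior with lists of dicts; the `union_by_name` helper, the column names, and the sample rows are all hypothetical.

```python
def union_by_name(left, right):
    """Model of a by-name union that fills missing columns with None."""
    columns = list(left[0].keys())
    columns += [c for c in right[0].keys() if c not in columns]
    return [{c: row.get(c) for c in columns} for row in left + right]


# Hypothetical data: au_rows has one extra column ("state")
au_rows = [{"id": 1, "name": "Ana", "state": "NSW"}]
nz_rows = [{"id": 2, "name": "Bo"}]

combined = union_by_name(au_rows, nz_rows)
for row in combined:
    print(row)
```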

Which feature of Spark Connect should be considered when designing an application that interacts remotely with the Spark cluster?

A data scientist is working on a large dataset in Apache Spark using PySpark. The data scientist has a DataFrame df with columns user_id, product_id, and purchase_amount and needs to perform some operations on this data efficiently.

Which sequence of operations results in transformations that require a shuffle followed by transformations that do not?

4 of 55.

A developer is working on a Spark application that processes a large dataset using SQL queries. Despite having a large cluster, the developer notices that the job is underutilizing the available resources. Executors remain idle for most of the time, and logs reveal that the number of tasks per stage is very low. The developer suspects that this is causing suboptimal cluster performance.

Which action should the developer take to improve cluster utilization?

47 of 55.

A data engineer has written the following code to join two DataFrames df1 and df2:

df1 = spark.read.csv("sales_data.csv")
df2 = spark.read.csv("product_data.csv")

df_joined = df1.join(df2, df1.product_id == df2.product_id)

The DataFrame df1 contains ~10 GB of sales data, and df2 contains ~8 MB of product data.

Which join strategy will Spark use?
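The sizes in the question can be compared against `spark.sql.autoBroadcastJoinThreshold`, which defaults to 10 MB. The sketch below is only the size arithmetic, assuming the default threshold is unchanged and that Spark's size estimates match the stated figures; `is_broadcast_candidate` is a hypothetical helper, not a Spark API.

```python
# Default value of spark.sql.autoBroadcastJoinThreshold, in bytes (10 MB)
DEFAULT_AUTO_BROADCAST_THRESHOLD = 10 * 1024 * 1024


def is_broadcast_candidate(estimated_size_bytes,
                           threshold=DEFAULT_AUTO_BROADCAST_THRESHOLD):
    """True if a table of this estimated size fits under the threshold."""
    return 0 <= estimated_size_bytes <= threshold


sales_size = 10 * 1024 ** 3    # ~10 GB
product_size = 8 * 1024 ** 2   # ~8 MB

print(is_broadcast_candidate(sales_size))     # False
print(is_broadcast_candidate(product_size))   # True
```

Setting the threshold to -1 disables automatic broadcasting, which the model reflects because no non-negative size can be at or below -1.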

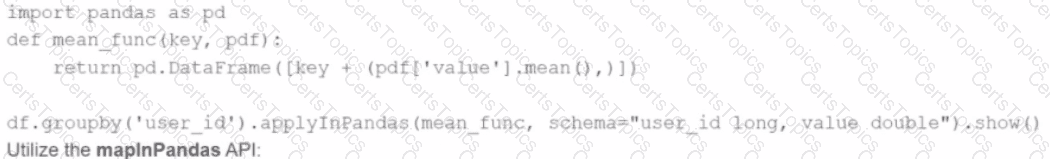

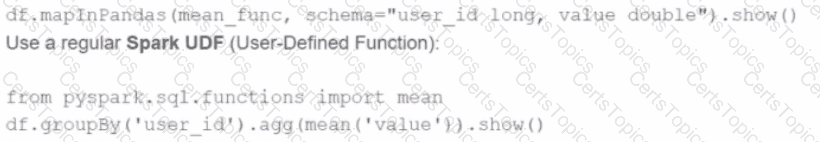

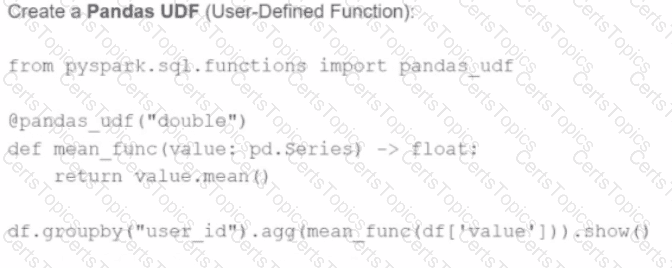

A developer is working with a pandas DataFrame containing user behavior data from a web application.

Which approach should be used for executing a groupBy operation in parallel across all workers in Apache Spark 3.5?

A) Use the applyInPandas API

B)

C)

D)

23 of 55.

A data scientist is working with a massive dataset that exceeds the memory capacity of a single machine. The data scientist is considering using Apache Spark™ instead of traditional single-machine languages like standard Python scripts.

Which two advantages does Apache Spark™ offer over a normal single-machine language in this scenario? (Choose 2 answers)

34 of 55.

A data engineer is investigating a Spark cluster that is experiencing underutilization during scheduled batch jobs.

After checking the Spark logs, they noticed that tasks are often getting killed due to timeout errors, and there are several warnings about insufficient resources in the logs.

Which action should the engineer take to resolve the underutilization issue?

A developer is running Spark SQL queries and notices underutilization of resources. Executors are idle, and the number of tasks per stage is low.

What should the developer do to improve cluster utilization?

A data analyst wants to add a column date derived from a timestamp column.


What is the relationship between jobs, stages, and tasks during execution in Apache Spark?


You have:

DataFrame A: 128 GB of transactions

DataFrame B: 1 GB user lookup table

Which strategy is correct for broadcasting?

3 of 55.

A data engineer observes that the upstream streaming source feeds the event table frequently and sends duplicate records. Upon analyzing the current production table, the data engineer found that the time difference in the event_timestamp column of the duplicate records is, at most, 30 minutes.

To remove the duplicates, the engineer adds the code:

df = df.withWatermark("event_timestamp", "30 minutes")

What is the result?