35 of 55.

A data engineer is building a Structured Streaming pipeline and wants it to recover from failures or intentional shutdowns by continuing where it left off.

How can this be achieved?

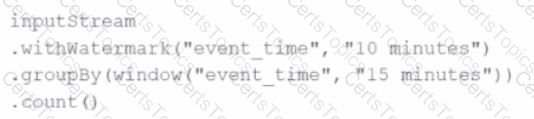

Given this code:

.withWatermark("event_time", "10 minutes")

.groupBy(window("event_time", "15 minutes"))

.count()

What happens to data that arrives after the watermark threshold?

Options:

10 of 55.

What is the benefit of using Pandas API on Spark for data transformations?

A data engineer is streaming data from Kafka and requires:

Minimal latency

Exactly-once processing guarantees

Which trigger mode should be used?