A developer is working with a pandas DataFrame containing user behavior data from a web application.

Which approach should be used for executing a groupBy operation in parallel across all workers in Apache Spark 3.5?

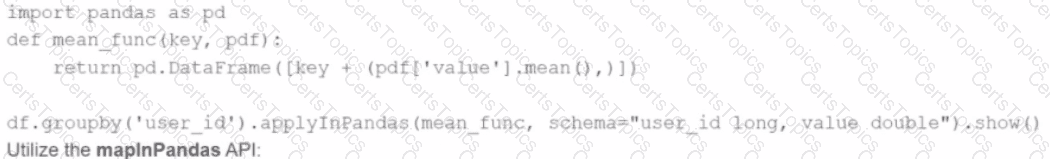

A)

Use the `applyInPandas` API

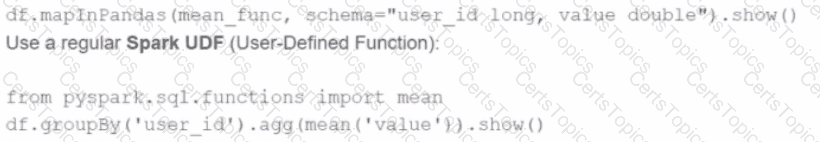

B)

C)

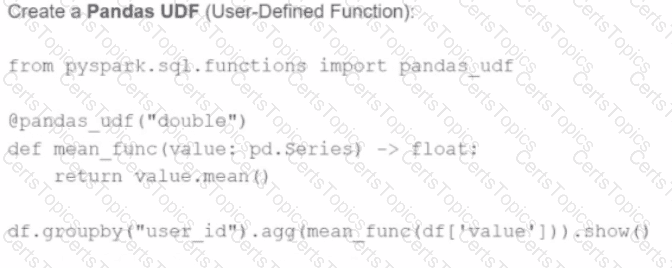

D)
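For reference on option A, here is a minimal sketch of how the `applyInPandas` API is typically called on a grouped Spark DataFrame. The column names (`user_id`, `clicks`), the helper names (`summarize_user`, `run_on_spark`), and the sample data are all hypothetical, since the question does not show the actual schema. The Spark part sits inside a function so the per-group logic can be checked locally with pandas alone. It assumes `pyspark` and `pyarrow` are installed wherever `run_on_spark` is called.

```python
import pandas as pd


def summarize_user(pdf: pd.DataFrame) -> pd.DataFrame:
    """Per-group function: receives all rows of one group as a pandas DataFrame."""
    return pd.DataFrame({
        "user_id": [pdf["user_id"].iloc[0]],
        "total_clicks": [int(pdf["clicks"].sum())],
    })


def run_on_spark(pandas_df: pd.DataFrame):
    """Apply summarize_user to each group on the cluster (assumes pyspark + pyarrow)."""
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.getOrCreate()
    sdf = spark.createDataFrame(pandas_df)
    # Each group is handed to summarize_user on an executor; the schema
    # string must describe the DataFrame that the function returns.
    return sdf.groupBy("user_id").applyInPandas(
        summarize_user, schema="user_id long, total_clicks long"
    )


# Hypothetical sample data, used only to exercise the per-group logic locally.
events = pd.DataFrame({"user_id": [1, 1, 2], "clicks": [3, 4, 5]})
local_result = pd.concat(
    [summarize_user(group) for _, group in events.groupby("user_id")],
    ignore_index=True,
)
```

With a Spark session available, `run_on_spark(events).show()` would run the same function once per `user_id` group, with the groups distributed across the workers.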

23 of 55.

A data scientist is working with a massive dataset that exceeds the memory capacity of a single machine. The data scientist is considering using Apache Spark™ instead of a traditional single-machine approach, such as standard Python scripts.

Which two advantages does Apache Spark™ offer over a normal single-machine language in this scenario? (Choose 2 answers)

34 of 55.

A data engineer is investigating a Spark cluster that is experiencing underutilization during scheduled batch jobs.

After checking the Spark logs, the engineer notices that tasks are often killed due to timeout errors, along with several warnings about insufficient resources.

Which action should the engineer take to resolve the underutilization issue?

A developer is running Spark SQL queries and notices underutilization of resources. Executors are idle, and the number of tasks per stage is low.

What should the developer do to improve cluster utilization?